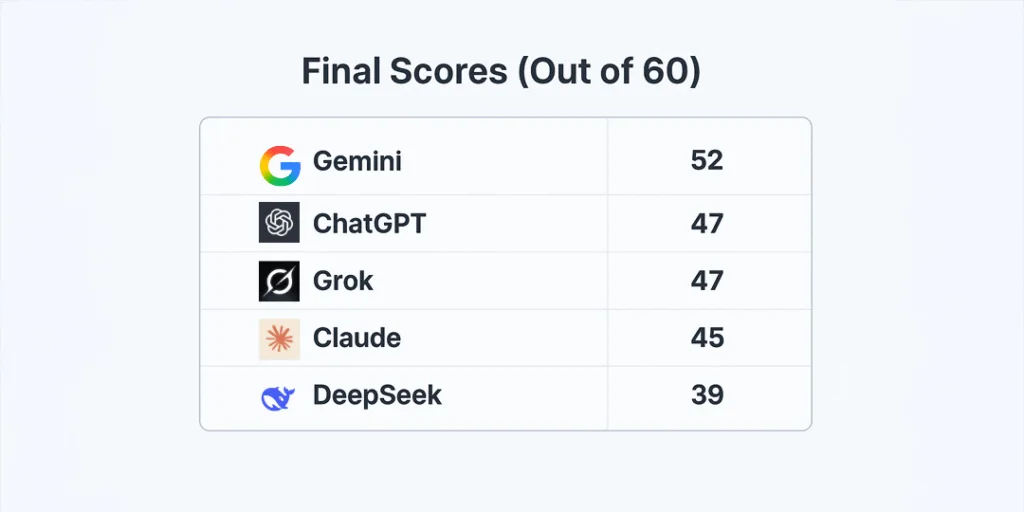

Artificial Intelligence platforms are evolving rapidly, each bringing unique strengths and limitations. To better understand their performance, we conducted a head-to-head comparison of five leading AI systems – ChatGPT, DeepSeek, Gemini, Grok, and Claude – across six critical factors: Accuracy, Speed, Clarity, Creativity, Handling Complexity, and Image Generation. Each factor was scored out of 10, for a maximum possible total of 60.
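To make the scoring scheme concrete, here is a minimal sketch of how a per-platform total could be tallied from six factor scores. The function name `total_score` and the placeholder values are assumptions for illustration only; they are not the scores awarded in this comparison.

```python
# The six factors named in this comparison, each scored from 0 to 10.
FACTORS = (
    "Accuracy",
    "Speed",
    "Clarity",
    "Creativity",
    "Handling Complexity",
    "Image Generation",
)
MAX_PER_FACTOR = 10
MAX_TOTAL = MAX_PER_FACTOR * len(FACTORS)  # 6 factors x 10 points = 60


def total_score(scores: dict[str, int]) -> int:
    """Validate one platform's factor scores and return their sum."""
    missing = [factor for factor in FACTORS if factor not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    for factor in FACTORS:
        if not 0 <= scores[factor] <= MAX_PER_FACTOR:
            raise ValueError(f"{factor} must be between 0 and {MAX_PER_FACTOR}")
    return sum(scores[factor] for factor in FACTORS)


# Placeholder values only (hypothetical), not results from this article.
example_scores = {factor: 5 for factor in FACTORS}
print(total_score(example_scores), "/", MAX_TOTAL)
```

A platform without image generation would simply receive a low or zero score for that factor, which is one way the total can separate otherwise similar systems.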

1. Accuracy

Prompt: “What is the primary source of energy for Earth’s climate system, and how does it influence global temperatures?”

All models provided correct answers, but Gemini stood out by offering a more detailed explanation, while Claude delivered a reader-friendly, engaging response. ChatGPT and Grok gave solid answers that did not stand out. DeepSeek’s answer lacked depth and reasoning.

2. Speed

Prompt: “List five benefits of recycling in 50 words or less.”

When it came to speed, ChatGPT and Claude were the fastest, responding in around 3-4 seconds. DeepSeek and Gemini were slightly slower, while Grok lagged significantly, taking over 20 seconds.
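The article does not say how response times were measured, so the following is only a sketch of one way to time a single prompt. `time_response` and `fake_model` are hypothetical names; `fake_model` is a stub standing in for whatever client would actually call a platform.

```python
import time
from typing import Callable


def time_response(ask: Callable[[str], str], prompt: str) -> tuple[str, float]:
    """Send one prompt and return the reply with the elapsed wall-clock seconds."""
    start = time.perf_counter()
    reply = ask(prompt)
    elapsed = time.perf_counter() - start
    return reply, elapsed


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real platform call; it only simulates latency.
    time.sleep(0.05)
    return "stub reply"


reply, seconds = time_response(
    fake_model, "List five benefits of recycling in 50 words or less."
)
print(f"responded in {seconds:.2f} s")
```

A single measurement is sensitive to network conditions and server load, so repeating the prompt several times and comparing medians would give a fairer picture.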

3. Clarity

Prompt: “Explain how a bicycle works in simple terms, suitable for a 10-year-old.”

Here, Claude excelled by delivering a child-friendly, crystal-clear explanation. Grok and Gemini also provided strong responses, though Gemini’s explanation leaned more technical. ChatGPT and DeepSeek offered average clarity, missing some key details.

4. Creativity

Prompt: “Invent a new mythical creature, describe its appearance, and explain one unique ability it has.”

Most AIs generated familiar fantasy tropes (wings, horns, glowing eyes), but Grok impressed with an original creature and a scientifically creative ability. The others provided imaginative but somewhat predictable responses.

5. Handling Complexity

Prompt: “If humans could instantly teleport anywhere, what would be three societal impacts in the next decade?”

Gemini excelled by offering detailed examples and diverse implications, while the others stuck to surface-level impacts (transport, oil industry, border security). No model earned a perfect score, reflecting the challenge of fully exploring complex scenarios.

6. Image Generation

Prompt: “Generate an image of a futuristic city at sunset with flying cars and neon lights.”

This round separated the platforms with image-generation capabilities from those without. Gemini delivered the most realistic and visually stunning result, while ChatGPT produced a more artistic rendering. Grok’s image lacked cohesion, with poorly aligned elements. Claude and DeepSeek do not currently support image generation.

The results reveal clear distinctions:

Gemini emerged as the overall leader, excelling in accuracy, complexity handling, and image generation.

ChatGPT and Grok tied for second place, each offering strong all-round performance with different strengths – ChatGPT in speed and accessibility, Grok in creativity.

Claude shone in clarity and friendliness, making it highly effective for educational or beginner-friendly use cases.

DeepSeek, while capable, lagged behind in depth and functionality, especially without image generation.

Ultimately, Gemini stands out as the most versatile and high-performing AI platform in this comparison, though each AI has distinct advantages depending on the task at hand.