For nearly two decades, Google Maps has guided us with robotic calm: turn left, drive 200 meters, reach your destination. Now, that familiar voice is evolving into something far more intelligent.

With the arrival of Gemini, Google’s flagship AI model, the world’s leading navigation app is becoming conversational, predictive, and contextually aware. The age of static GPS directions is quietly giving way to an era of dynamic, thinking maps.

The intelligent copilot comes alive

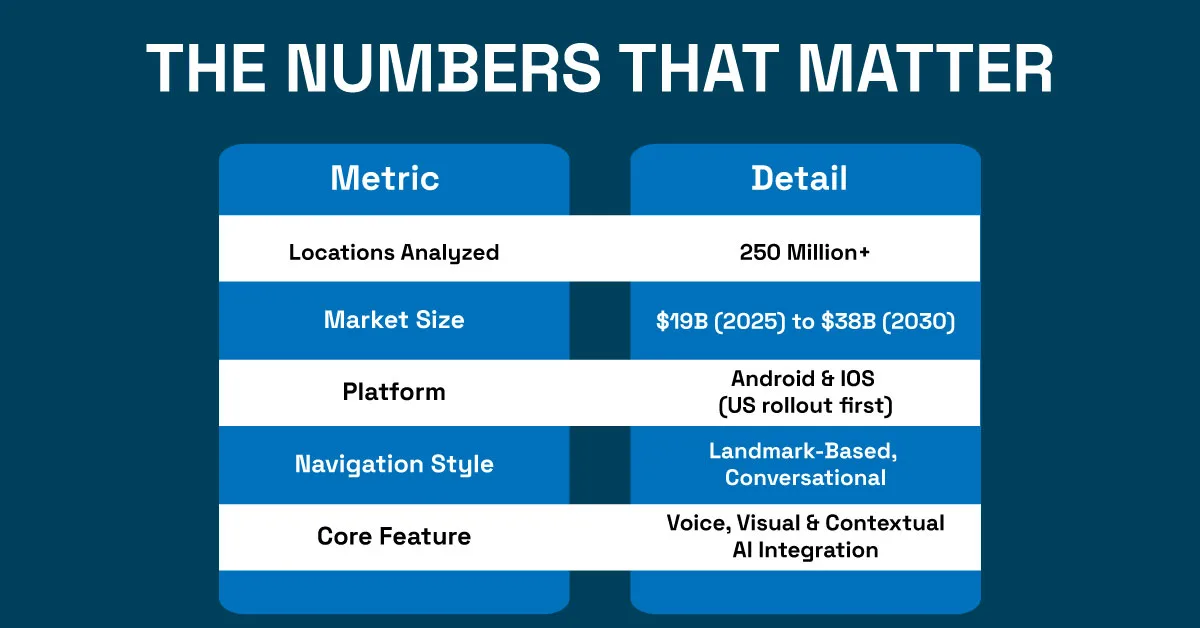

The new Gemini-powered Google Maps acts like a digital travel companion. Drivers can now ask natural questions, such as “Find me a vegan café nearby with parking,” and get a voice-guided response in seconds. The system scans more than 250 million locations, using Street View data to reference real-world landmarks instead of lifeless distance markers.

Google Maps is getting a Gemini upgrade that makes navigating smarter and easier. “We’re introducing the first hands-free, conversational driving experience in Google Maps, built with Gemini and our comprehensive information about the real world,” said Amanda Leicht Moore (pictured), Director of Product, Google Maps.

Instead of “turn right in 500 meters,” Gemini now says, “Turn after the Shell station.” It feels human. It feels alive. Users can even report traffic accidents or floods verbally, and the AI adjusts routes instantly. What was once a one-way command line has evolved into a two-way dialogue between driver and map.

Google Maps: Seeing through AI’s eyes

The addition of Lens with Gemini transforms how users interact with the physical world. Point your camera at a restaurant, museum, or building, and ask, “What is that place?” or “Is it popular?” The AI identifies it, provides ratings, reviews, and even lets you add events to your calendar, all without typing a word.

In this vision of Google Maps, navigation merges with perception. The app doesn’t just see where you are; it understands what surrounds you. This shift from manual input to AI-driven anticipation changes how people move, search, and decide. It is as if every street corner now holds a conversation waiting to happen.

Strategy behind the map

By integrating Gemini, Google positions itself several steps ahead of rival assistants such as Apple’s Siri and Amazon’s Alexa, both of which still struggle with contextual understanding. The move redefines Google’s core advantage: data. With billions of location-based queries, Google Maps becomes not only a navigation app but also a massive AI laboratory training itself on real-world movement patterns.

The commercial potential is enormous. The embedded AI market, covering navigation, connected vehicles, and smart voice assistants, is projected to rise from $19 billion in 2025 to $38 billion by 2030. Every kilometer traveled through Google Maps is now a data point feeding Google’s machine learning engines.

This convergence of voice, vision, and location marks the beginning of a new dominance strategy, one where AI quietly becomes an unseen co-pilot in everyday life.

Would you rather have the old or the new Google Maps?

For users, the new Google Maps feels less like a tool and more like a companion. It no longer waits for typed commands; it listens, responds, and learns. Every instruction carries nuance, emotion, and understanding. And that’s what makes this transformation so profound.

By embedding Gemini at the core of its navigation system, Google isn’t just improving directions; it’s shaping how humans interact with space, speech, and digital systems. The future traveler won’t just be guided; they’ll be understood.